Kubernetes Architecture: Understanding the Fundamentals

In this article, I provide a comprehensive guide to Kubernetes architecture. My aim is to explain each Kubernetes component in detail, with illustrations, so that you can understand the architecture and grasp its fundamental concepts.

This guide will cover Kubernetes’ core components, including the Control Plane and Worker Node, as well as the workflows that connect these components. We will also explore Kubernetes Cluster Addon Components, such as the CNI Plugin, and answer frequently asked questions about Kubernetes architecture. Whether you're a beginner or an experienced user, this guide will provide valuable insight into Kubernetes architecture.

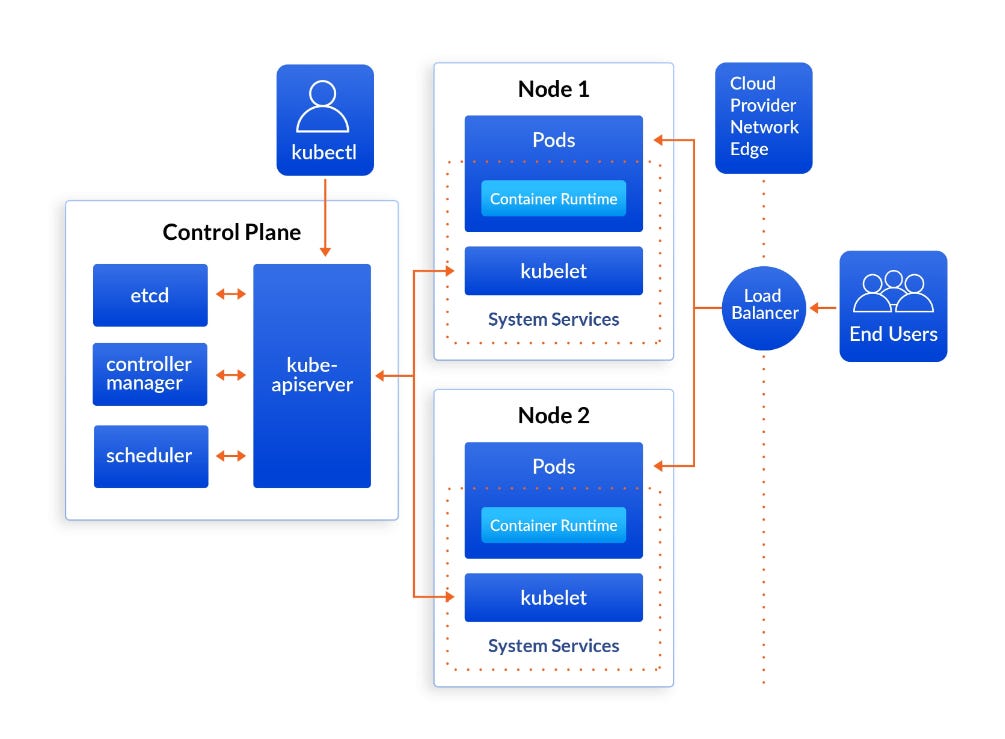

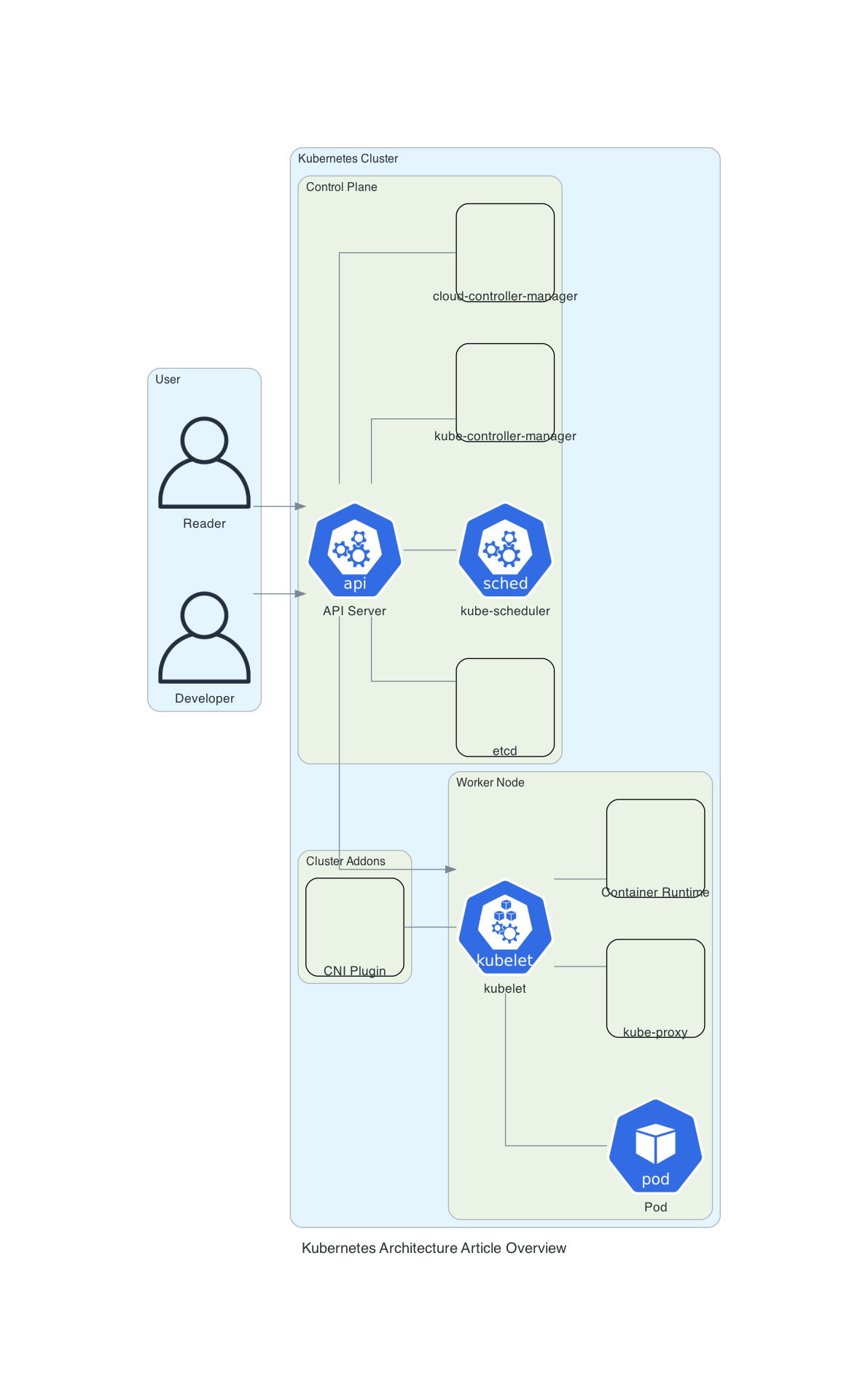

Kubernetes Architecture

As a distributed system, Kubernetes comprises multiple components spread across different servers over a network, forming a Kubernetes cluster. The cluster consists of two types of nodes: Control Plane nodes and Worker Nodes.

Control Plane

The Control Plane is responsible for container orchestration and maintaining the desired state of the cluster. It consists of the following components:

kube-apiserver: The Kubernetes API server that exposes the Kubernetes API.

etcd: A distributed key-value store that stores the cluster state.

kube-scheduler: Assigns workloads to specific nodes in the cluster.

kube-controller-manager: Monitors the state of the cluster and makes necessary changes to ensure it remains in the desired state.

cloud-controller-manager: Integrates with cloud provider APIs to manage cloud resources.

Worker Node

The Worker Nodes are responsible for running containerized applications. The Worker Node consists of the following components:

kubelet: Communicates with the Control Plane and manages containers on the node.

kube-proxy: Routes Service traffic to the appropriate pods.

Container runtime: Runs containers on the node.

This architecture diagram shows all the components of the Kubernetes cluster and how external systems connect to the Kubernetes cluster.

Kubernetes Control Plane Components

1. Kube-apiserver

The kube-apiserver is the central hub of the Kubernetes cluster and exposes the Kubernetes API. It handles all API requests from end users and from other cluster components. End users, third-party services, and cluster components such as the kubelet, scheduler, and controllers reach the API server through its HTTP REST API over TLS, while the API server itself talks to etcd over gRPC. The API server is responsible for API management, authentication, authorization, and processing API requests. It is the only component that communicates with etcd, and it coordinates all processes between the control plane and worker node components. The API server also has a built-in bastion apiserver proxy that enables access to ClusterIP services from outside the cluster.
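The order in which the API server handles a request (authenticate first, then authorize, then process) can be pictured as a small pipeline. The Python sketch below is a toy model only: the token table, the permission set, and the function names are hypothetical and are not taken from the real API server, which relies on certificates, tokens, RBAC objects, and admission controllers.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical credentials and permissions, for illustration only.
TOKENS = {"token-alice": "alice", "token-bob": "bob"}
ALLOWED = {
    ("alice", "get", "pods"),
    ("alice", "create", "pods"),
    ("bob", "get", "pods"),
}


@dataclass
class Request:
    token: str
    verb: str
    resource: str


def authenticate(req: Request) -> Optional[str]:
    """Map a bearer token to a user name, or None if the token is unknown."""
    return TOKENS.get(req.token)


def authorize(user: str, req: Request) -> bool:
    """Check whether the user may perform the verb on the resource."""
    return (user, req.verb, req.resource) in ALLOWED


def handle(req: Request) -> int:
    """Return an HTTP-style status code for the request."""
    user = authenticate(req)
    if user is None:
        return 401  # caller could not be identified
    if not authorize(user, req):
        return 403  # caller is known but not permitted
    return 200  # a real server would now validate and persist the object
```

A request with a valid token but a missing permission stops at the authorization step and never reaches storage, which is why only the API server needs direct access to etcd.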

2. Etcd

Etcd is a strongly consistent, distributed key-value store that acts as both a backend for service discovery and a database. It is the brain of the Kubernetes cluster and stores all configurations, states, and metadata of Kubernetes objects. Etcd uses the Raft consensus algorithm for strong consistency and availability, and it works in a leader-member fashion so that the cluster stays available and can withstand node failures. Kubernetes stores all objects under the /registry directory key in key-value format. Etcd exposes its key-value API over gRPC, and its gRPC gateway is a RESTful proxy that translates HTTP API calls into gRPC messages.
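Two points above lend themselves to a short worked example: how many member failures a Raft-based etcd cluster can withstand, and what a key under /registry looks like. The Python sketch below is illustrative only. The helper names are hypothetical, and the key layout is a simplified assumption (resource, optional namespace, name); some resource types are stored under different paths.

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with the given number of members."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """Number of members that can fail while a majority is still available."""
    return members - quorum(members)


def registry_key(resource: str, name: str, namespace: str = "") -> str:
    """Build a key in the assumed /registry/<resource>/<namespace>/<name> form."""
    parts = ["/registry", resource]
    if namespace:
        parts.append(namespace)  # cluster-scoped objects have no namespace part
    parts.append(name)
    return "/".join(parts)
```

Under this arithmetic a 3-member cluster tolerates one failure and a 5-member cluster tolerates two, while a 4-member cluster still tolerates only one, which is why odd member counts are the usual choice.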

3. Kube-scheduler

The kube-scheduler is responsible for scheduling Kubernetes pods on worker nodes. It selects the best node for a pod based on the pod's requirements, using filtering and scoring operations. It first filters out the nodes that cannot run the pod, then ranks the remaining nodes by assigning each one a score. Scoring is done by calling multiple scheduling plugins, and the worker node with the highest score is selected for the pod. Once the node is selected, the scheduler creates a binding for the pod in the API server. The scheduler always places high-priority pods ahead of low-priority pods in the scheduling queue.
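The filter-then-score flow can be sketched in a few lines of Python. This is a minimal illustration, not the scheduler's actual logic: the `Node` and `Pod` types are hypothetical, only CPU and memory are considered, and the scoring rule (prefer the node with the most headroom left) is an assumption standing in for the real scoring plugins.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB


@dataclass
class Pod:
    name: str
    cpu_m: int
    mem_mi: int


def filter_nodes(pod: Pod, nodes: List[Node]) -> List[Node]:
    """Filtering: keep only nodes with enough free CPU and memory."""
    return [n for n in nodes
            if n.free_cpu_m >= pod.cpu_m and n.free_mem_mi >= pod.mem_mi]


def _fraction_left(free: int, needed: int) -> float:
    """Share of a resource that stays free after placing the pod."""
    return (free - needed) / free if free > 0 else 0.0


def score(pod: Pod, node: Node) -> float:
    """Scoring (assumed rule): average headroom left across CPU and memory."""
    return (_fraction_left(node.free_cpu_m, pod.cpu_m)
            + _fraction_left(node.free_mem_mi, pod.mem_mi)) / 2


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Return the name of the best node, or None if no node fits."""
    feasible = filter_nodes(pod, nodes)
    if not feasible:
        return None  # the pod would stay pending
    return max(feasible, key=lambda n: score(pod, n)).name
```

Separating the two phases keeps the hard constraints (does the pod fit at all?) apart from the preferences (which fitting node is best?).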

4. Kube Controller Manager

The kube controller manager is responsible for running infinite control loops that watch the actual and desired state of objects. It ensures that the Kubernetes resource/object is in the desired state by reconciling the differences between the actual and desired state. The controller manager runs several controllers, including the node controller, replication controller, endpoints controller, and service account and token controllers.
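The reconciliation idea can be shown with a small Python sketch. It is a simplified illustration under stated assumptions: the function names and the `web-<n>` pod naming are hypothetical, and a real controller watches the API server continuously rather than being called once.

```python
from typing import List, Tuple

Action = Tuple[str, str]  # (verb, pod name)


def reconcile(desired: int, running: List[str], prefix: str = "web") -> List[Action]:
    """Compare desired and actual state and return the actions that close the gap."""
    actions: List[Action] = []
    if len(running) < desired:
        existing = set(running)
        index = 0
        # Create pods with unused names until the desired count is reached.
        while len(running) + len(actions) < desired:
            name = f"{prefix}-{index}"
            if name not in existing:
                actions.append(("create", name))
            index += 1
    elif len(running) > desired:
        # Delete the surplus pods, keeping the first ones in sorted order.
        for name in sorted(running)[desired:]:
            actions.append(("delete", name))
    return actions


def apply_actions(running: List[str], actions: List[Action]) -> List[str]:
    """Apply the actions to the observed state and return the new state."""
    state = set(running)
    for verb, name in actions:
        if verb == "create":
            state.add(name)
        else:
            state.discard(name)
    return sorted(state)
```

Running `reconcile` again on the state returned by `apply_actions` yields no further actions, which is the property a control loop relies on: once actual and desired state match, there is nothing left to do.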

5. Cloud Controller Manager (CCM)

The cloud controller manager (CCM) is responsible for managing the cloud provider-specific control loops. It provides a way to integrate Kubernetes with cloud providers, and it runs cloud-specific controllers, such as the node controller, route controller, and load balancer controller. CCM enables Kubernetes to take advantage of cloud provider features, such as load balancers, block storage, and network routing.

Kubernetes Worker Node Components

1. Kubelet

Kubelet is an agent component that runs on every node in the Kubernetes cluster. It is not run as a container, but rather as a daemon managed by systemd.

The primary responsibility of kubelet is to register worker nodes with the API server and work with the podSpec (Pod specification – YAML or JSON) primarily from the API server. The podSpec defines the containers that should run inside the pod, their resources (e.g. CPU and memory limits), and other settings such as environment variables, volumes, and labels.

Kubelet creates, modifies, and deletes containers for the pod. It is also responsible for handling liveness, readiness, and startup probes. Additionally, kubelet mounts volumes by reading the pod configuration and creating the respective directories on the host for the volume mount. Furthermore, kubelet collects and reports node and pod status via calls to the API server.
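To make probe handling more concrete, the following Python sketch models how a run of failed liveness probes can lead to a container restart. It is a simplified model, not kubelet code: the class name is hypothetical, and the only setting modelled is a failure threshold (3 is used as the default here, in line with the usual `failureThreshold` default).

```python
class LivenessTracker:
    """Counts consecutive probe failures for one container."""

    def __init__(self, failure_threshold: int = 3) -> None:
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0

    def record(self, probe_ok: bool) -> bool:
        """Record one probe result; return True if a restart should be triggered."""
        if probe_ok:
            self.consecutive_failures = 0  # any success resets the streak
            return False
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failure_threshold:
            self.consecutive_failures = 0  # counting starts over after the restart
            return True
        return False
```

A single successful probe resets the counter, so only an unbroken run of failures triggers a restart in this model.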

Kubelet also acts as a controller: it watches for pod changes and uses the node’s container runtime to pull images, run containers, and so on. Besides podSpecs from the API server, kubelet can accept a podSpec from a file, an HTTP endpoint, and an HTTP server. A good example of “podSpec from a file” is Kubernetes static pods. Static pods are controlled by kubelet, not the API server.

2. Kube Proxy

Kube-proxy is a daemon that runs on every node as a daemonset. It is a proxy component that implements the Kubernetes Services concept for pods. It primarily proxies UDP, TCP, and SCTP and does not understand HTTP.

When you expose pods using a Service (ClusterIP), kube-proxy creates network rules to send traffic to the backend pods (endpoints) grouped under the Service object. In other words, the load balancing behind a Service is handled by kube-proxy. Name-based service discovery is usually provided separately by the cluster DNS addon.

Kube-proxy talks to the API server to get the details about the Service (ClusterIP) and respective pod IPs & ports (endpoints). It also monitors for changes in service and endpoints.

Kube-proxy then uses any one of the following modes to create/update rules for routing traffic to pods behind a Service:

IPTables: This is the default mode on Linux. Traffic is handled by iptables rules that kube-proxy creates for each Service. These rules capture the traffic coming to the ClusterIP and forward it to the backend pods.

IPVS: For clusters with more than 1000 services, IPVS offers a performance improvement. It supports several load-balancing algorithms for the backends, including round-robin (rr), least connection (lc), destination hashing (dh), source hashing (sh), shortest expected delay (sed), and never queue (nq).

Userspace (legacy & not recommended)

Kernelspace: This mode is only for Windows systems.
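For the IPTables mode, it helps to see how a chain of rules can spread traffic evenly across backends. As far as I understand it, the rules for a Service are evaluated in order and each one matches with a random probability, so that rule i of n matches with probability 1/(n - i) if reached. The Python sketch below works through that arithmetic. The function names are hypothetical and the code imitates only the selection logic, not real iptables rules.

```python
import random
from fractions import Fraction
from typing import List


def rule_probabilities(n: int) -> List[Fraction]:
    """Match probability of each rule, given that earlier rules did not match."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_share(n: int) -> List[Fraction]:
    """Overall share of traffic that each backend ends up receiving."""
    shares = []
    remaining = Fraction(1)  # traffic not yet claimed by an earlier rule
    for p in rule_probabilities(n):
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


def pick_backend(endpoints: List[str], rng: random.Random) -> str:
    """Walk the rule chain and return the first endpoint whose rule matches."""
    for endpoint, p in zip(endpoints, rule_probabilities(len(endpoints))):
        if rng.random() < p:
            return endpoint
    return endpoints[-1]  # not reached: the last rule has probability 1
```

With three backends the rule probabilities are 1/3, 1/2, and 1, yet each backend receives exactly one third of the traffic, because later rules only see the traffic that earlier rules let through.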

3. Container Runtime

Container runtime runs on all the nodes in the Kubernetes cluster. It is responsible for pulling images from container registries, running containers, allocating and isolating resources for containers, and managing the entire lifecycle of a container on a host.

Kubernetes supports multiple container runtimes (CRI-O, containerd, Docker Engine through the cri-dockerd adapter, etc.) that are compliant with the Container Runtime Interface (CRI). This means that all these container runtimes implement the CRI and expose gRPC CRI APIs (runtime and image service endpoints).

The kubelet agent is responsible for interacting with the container runtime using CRI APIs to manage the lifecycle of a container. It also gets all the container information from the container runtime and provides it to the control plane.

Kubernetes Cluster Addon Components

1. CNI Plugin

Apart from the core components, a Kubernetes cluster needs addon components to be fully operational. The selection of addon components depends on the project requirements and use cases. One of the popular addon components that you might need on a cluster is the Container Network Interface (CNI) Plugin.

CNI is a plugin-based architecture with vendor-neutral specifications and libraries for creating network interfaces for containers. It is not specific to Kubernetes and can be used across container orchestration tools like Kubernetes, Mesos, CloudFoundry, Podman, Docker, etc. CNI also allows a wide range of networking capabilities, so users can choose the networking solution that best fits their needs from different providers.

When it comes to Kubernetes, the kube-controller-manager is responsible for assigning a pod CIDR to each node. Each pod gets a unique IP address from the pod CIDR. Kubelet interacts with the container runtime to launch the scheduled pod. The CRI plugin, which is part of the container runtime, interacts with the CNI plugin to configure the pod network. The CNI plugin enables networking between pods spread across the same or different nodes, often by using an overlay network.
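The per-node pod CIDR assignment is easy to illustrate with Python's standard `ipaddress` module. This is a sketch under assumptions: the cluster range `10.244.0.0/16`, the /24 size per node, and the function names are example choices for illustration, not values taken from any particular cluster.

```python
import ipaddress
from itertools import islice
from typing import Dict, List


def node_pod_cidrs(cluster_cidr: str, node_prefix: int,
                   node_names: List[str]) -> Dict[str, str]:
    """Carve the cluster range into equal subnets and hand one to each node."""
    network = ipaddress.ip_network(cluster_cidr)
    subnets = network.subnets(new_prefix=node_prefix)
    return {name: str(subnet) for name, subnet in zip(node_names, subnets)}


def first_pod_ips(pod_cidr: str, count: int) -> List[str]:
    """Return the first usable addresses a node could give to its pods."""
    hosts = ipaddress.ip_network(pod_cidr).hosts()
    return [str(ip) for ip in islice(hosts, count)]
```

Because the node subnets do not overlap, every pod IP is unique across the cluster, and the node hosting a pod can be derived from the pod's address alone.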

Some of the high-level functionalities provided by CNI plugins include pod networking and pod network security & isolation using Network Policies to control the traffic flow between pods and between namespaces. Popular CNI plugins include Calico, Flannel, Weave Net, Cilium (Uses eBPF), Amazon VPC CNI (For AWS VPC), and Azure CNI (For Azure Virtual network).

Kubernetes networking is a big topic and it differs based on the hosting platforms.

Kubernetes Architecture FAQs

The Purpose of the Kubernetes Control Plane

The Kubernetes control plane is responsible for ensuring that the cluster and the applications running on it are in the desired state. The control plane is made up of components such as the API server, etcd, Scheduler, and controller manager.

The Purpose of Worker Nodes in a Kubernetes Cluster

Worker nodes are the servers that run containers in a Kubernetes cluster. They are managed by the control plane and receive instructions on how to run the containers that are part of pods.

Securing Communication Between the Control Plane and Worker Nodes in Kubernetes

Communication between the control plane and worker nodes in Kubernetes is secured using PKI certificates. Communication between different components happens over TLS, ensuring that only trusted components can communicate with each other.

The Purpose of the etcd Key-Value Store in Kubernetes

The etcd key-value store in Kubernetes primarily stores information about Kubernetes objects, cluster information, node information, and configuration data of the cluster. It also stores the desired state of the applications running on the cluster.

What Happens to Kubernetes Applications if etcd Goes Down?

If etcd experiences an outage, it will not be possible to create or update any objects in Kubernetes. However, applications that are already running will keep running during the outage.

Conclusion

By understanding the Kubernetes architecture, I am able to implement and operate Kubernetes on a day-to-day basis. This knowledge is essential for running and troubleshooting applications in a production-level cluster setup. Step-by-step Kubernetes tutorials have provided me with hands-on experience with Kubernetes objects and resources, further enhancing my understanding of this powerful technology.